Rapid change in the field of advanced driver-assistance systems (ADAS) and automated driving (AD) presents developers with a slew of challenges that stem from the inherent limitations of relying solely on real-world data. These challenges include the rarity of critical edge cases, the high costs and complexities of data collection and labeling, and the difficulty in achieving the level of granularity needed for high-performing machine learning (ML) models.

This blog post explains how synthetic data, used effectively at scale, narrows the performance gap between rare and common classes and complements real-world data with diverse edge cases. It covers how synthetic data helps overcome real-world data limitations, reduce labeling costs, and improve the performance of ML models for ADAS and AD development.

Overcoming Real-World Data Limitations

In the context of ADAS and AD development, real-world data faces three limitations:

- ADAS and AD systems need to be robust across a diverse set of scenarios, but not all scenarios are represented in real-world data.

- Many ground-truth labels are difficult to obtain in the real world, especially “dense” labels.

- Real data collection and labeling are costly.

Enrich diversity of real-world data

Synthetic data bridges the gap between rare- and common-class data by upsampling rare classes in a simulation. For example, imagine that real-world data collected on the road does not include many cyclists, making them a rare class in your ADAS and AD perception system training dataset. Due to a lack of data, the system would perform poorly when detecting cyclists.

Using synthetic data, we can upsample cyclist occurrences so that each synthetic frame has a few cyclists. Because class and scenario distributions are completely controllable in simulation, teams can tune synthetic data to target an ML model’s shortcomings and boost performance.
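As a rough illustration of the upsampling arithmetic, the sketch below estimates how many synthetic frames a team would need for a rare class to reach a target share of all labeled instances. The function name, the class counts, and the assumption that each synthetic frame adds only rare-class instances are hypothetical simplifications, not part of any Applied Intuition tooling.

```python
from fractions import Fraction
from math import ceil


def synthetic_frames_needed(class_counts, rare_class, target_share, instances_per_frame):
    """Estimate the synthetic frames needed for `rare_class` to reach `target_share`.

    Simplifying assumption: every synthetic frame contributes exactly
    `instances_per_frame` instances of the rare class and nothing else.
    """
    target = Fraction(target_share)
    if not 0 < target < 1:
        raise ValueError("target_share must be strictly between 0 and 1")
    total = sum(class_counts.values())
    rare = class_counts[rare_class]
    if Fraction(rare, total) >= target:
        return 0  # the class already meets the target share
    # Solve (rare + extra) / (total + extra) >= target for extra.
    extra_instances = (target * total - rare) / (1 - target)
    return ceil(extra_instances / instances_per_frame)


# Hypothetical instance counts from a real-world dataset.
real_counts = {"car": 9000, "pedestrian": 900, "cyclist": 100}
frames = synthetic_frames_needed(real_counts, "cyclist", Fraction(1, 10), instances_per_frame=3)
print(frames)
```

In practice, synthetic frames also contain other classes, so the real calculation depends on the full class distribution configured in simulation.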

Complement real data

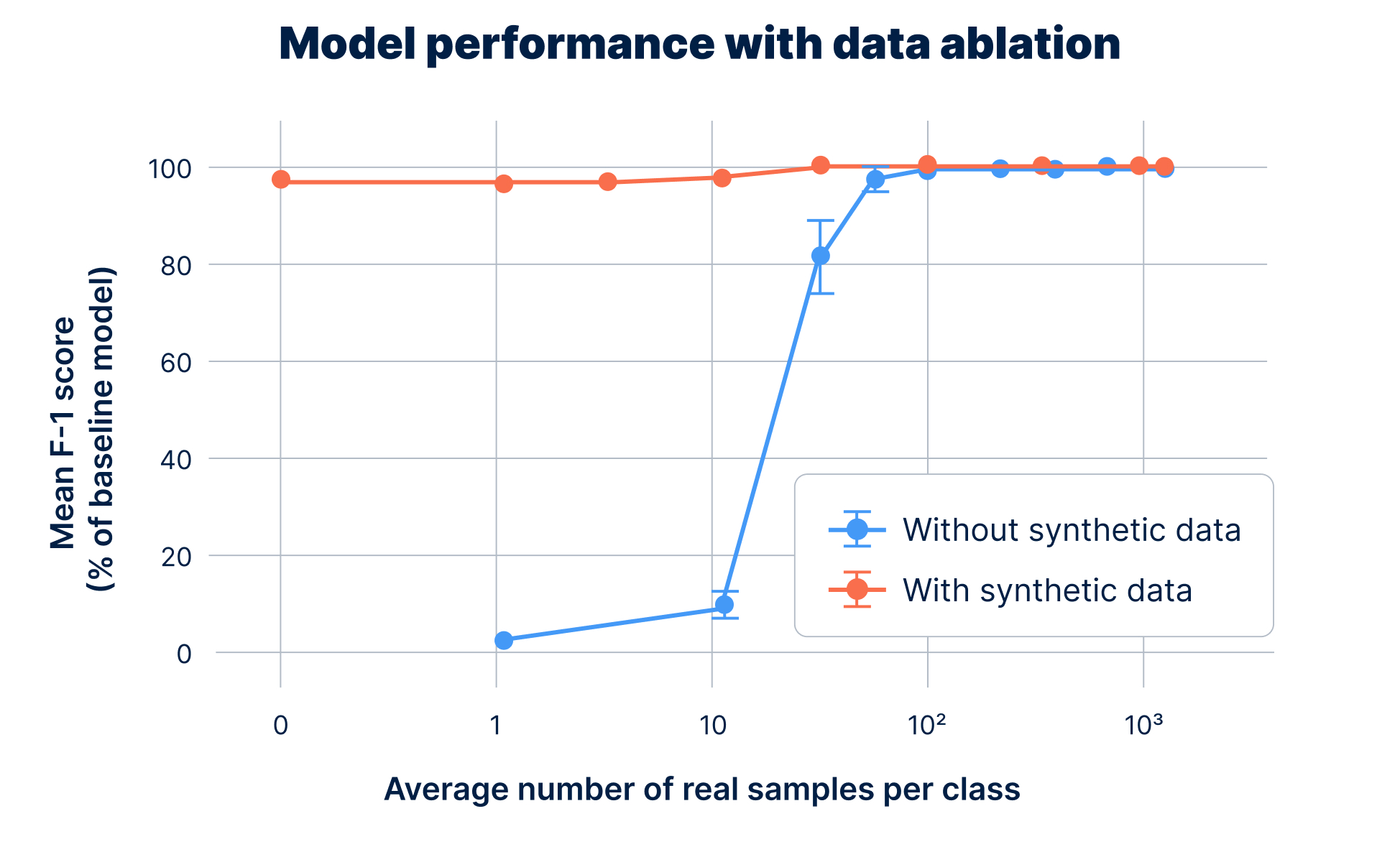

Collecting real-world data and labeling it is expensive. Synthetic data reduces a team’s dependency on the sheer amount of labeled real data they would need to collect to achieve similar results. In addition to improving an existing ML model by training it in conjunction with synthetic data, we can also use synthetic data as a substitute, reducing the cost of real data collection and labeling.

In Applied Intuition’s case study Using Synthetic Data to Improve Traffic Sign Classification and Achieve Regulatory Compliance, incorporating synthetic data reduced the need for real data by 90% while achieving similar model performance.

Reduce labeling costs, improve performance

ADAS and AD fleets can collect tens of thousands of raw frames with ease, but labeling them can be expensive. Dense labels are commonly used in perception tasks such as semantic segmentation, depth estimation, and optical flow estimation. Dense labeling provides more granularity and detail but is a difficult, time-consuming, and expensive process when left to humans to do manually, costing as much as 90 thousand dollars in lost productivity per dataset. Additionally, the quality of human labeling can suffer from human biases or noise.

Synthetic data, on the other hand, is generated from an underlying virtual world for which perfect information is available. For example, we can know exactly how far a sensor is from a pedestrian mesh in front of the sensor. We can also know the semantic class of an actor hit by a lidar point. Obtaining synthetic labels is just a matter of querying the virtual world during the rendering process.
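To make the idea of querying the virtual world concrete, here is a toy sketch in which actors are modeled as spheres and a single sensor ray returns both a depth value and a semantic class. The `query_label` function and the sphere-based scene are assumptions made for illustration; a production simulator would query meshes and full sensor models instead.

```python
import math


def query_label(origin, direction, actors):
    """Return (depth, semantic_class) for the nearest actor hit by a ray, or None.

    Each actor is a sphere given as (center, radius, semantic_class).
    `direction` is assumed to be a unit vector.
    """
    nearest = None
    for center, radius, semantic_class in actors:
        oc = [o - c for o, c in zip(origin, center)]
        b = sum(v * d for v, d in zip(oc, direction))
        c = sum(v * v for v in oc) - radius * radius
        discriminant = b * b - c
        if discriminant < 0:
            continue  # the ray misses this actor
        t = -b - math.sqrt(discriminant)  # distance to the first intersection
        if t > 0 and (nearest is None or t < nearest[0]):
            nearest = (t, semantic_class)
    return nearest


# Hypothetical scene: a pedestrian 10 m ahead and a vehicle 20 m ahead.
actors = [
    ((10.0, 0.0, 0.0), 0.5, "pedestrian"),
    ((20.0, 0.0, 0.0), 1.0, "vehicle"),
]
label = query_label((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), actors)
print(label)
```

Because the scene geometry is known exactly, the depth and class come directly from the query, with no human annotation step.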

How to Use Synthetic Data

Synthetic data and real data are not interchangeable, and using synthetic data requires care. There is a domain gap between synthetic and real data. Training ADAS and AD perception models on synthetic data alone and evaluating them on real data typically does not work well, as the domain gap is too big. How should teams use synthetic data?

One approach is to combine mixed training with fine-tuning. This means that the ML model is trained on a mix of synthetic and real data, and then a subset of the model weights is fine-tuned on real data only. The idea behind this approach is to expose the model to a diverse class distribution during training and then fine-tune it to the target domain where the model will actually be deployed.
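The two-stage schedule can be sketched with a deliberately tiny model. The example below fits `y = w * x + b` by gradient descent: stage one trains both parameters on mixed data, and stage two fine-tunes only the bias on real data. The toy data, the offset that stands in for a domain gap, and the choice of the bias as the fine-tuned subset are all assumptions for illustration; a perception model would typically freeze and unfreeze network layers instead.

```python
def train(data, w, b, lr=0.05, epochs=200, trainable=("w", "b")):
    """Gradient descent on mean squared error for the model y = w * x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        if "w" in trainable:
            w -= lr * grad_w
        if "b" in trainable:
            b -= lr * grad_b
    return w, b


def mse(data, w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical toy data: real samples follow y = 2x + 1 over a narrow range;
# synthetic samples cover a wider range but carry an offset (a stand-in for a domain gap).
real = [(x / 10, 2 * x / 10 + 1.0) for x in range(10)]
synthetic = [(x / 10, 2 * x / 10 + 0.5) for x in range(-20, 30)]

# Stage 1: mixed training on synthetic and real data, all weights trainable.
w_mixed, b_mixed = train(real + synthetic, w=0.0, b=0.0)

# Stage 2: fine-tune only a subset of the weights (here, the bias) on real data.
w_final, b_final = train(real, w_mixed, b_mixed, trainable=("b",))

print(mse(real, w_mixed, b_mixed), mse(real, w_final, b_final))
```

The frozen weight keeps what was learned from the broader mixed distribution, while the fine-tuned bias moves toward the real-data domain.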

A rule of thumb is that a model trained on both synthetic and real data should perform at least as well as the same model trained on real data alone. In other words, real-only performance is the lower bound. If the model performs worse, this usually means that the synthetic data itself is problematic. There are two common failure modes:

- Data domain gap: This means that the underlying virtual world used to generate the synthetic data is too unrealistic. For example, the sensor simulation is of low quality or the virtual environment itself is unrealistic with low-fidelity assets.

- Label domain gap: This means that there is a discrepancy between synthetic labels and real labels. This could happen if human labelers label the dataset with a rule different from that used for synthetic labels. For example, for lane marking detection, if human labelers always annotate the left-most boundary of a lane marking while synthetic data always annotates the centerline, this results in a label domain gap.
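For the lane marking example above, aligning the two labeling rules can be as simple as shifting synthetic centerline labels by half the marking width. The sketch below assumes points are `(x, y)` pairs in a vehicle frame where `y` increases to the left and the marking runs roughly parallel to the `x` axis; the function name and the 0.2 m marking width are hypothetical.

```python
def centerline_to_left_edge(centerline, marking_width):
    """Shift centerline points to the left-most boundary of the lane marking.

    Assumes (x, y) points in a vehicle frame with y increasing to the left
    and a marking that runs roughly parallel to the x axis.
    """
    half_width = marking_width / 2.0
    return [(x, y + half_width) for x, y in centerline]


# Hypothetical synthetic label: a straight marking 1 m to the left of the vehicle.
synthetic_centerline = [(0.0, 1.0), (5.0, 1.0), (10.0, 1.0)]
aligned = centerline_to_left_edge(synthetic_centerline, marking_width=0.2)
print(aligned)
```

Curved markings would need the shift applied along the local normal of the polyline rather than along a fixed axis.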

To reduce the label domain gap, teams should align the labeling procedure of synthetic data with that of human labelers. To reduce the data domain gap, the first step is to improve the underlying virtual environment and sensor models. Then, to further improve performance, teams can apply a domain adaptation technique. Domain adaptation is a rich research area with new methods appearing frequently. For image datasets, a simple, non-parametric technique is Fourier Domain Adaptation (FDA), which replaces the low-frequency part of a synthetic image’s amplitude spectrum with that of a real image while keeping the synthetic image’s phase. This approach makes the camera tone of synthetic data visually similar to that of real data and is effective in practice. More complex, parametric techniques use style transfer based on generative adversarial networks, such as cycle-consistent adversarial domain adaptation (CyCADA).
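A minimal NumPy sketch of the Fourier Domain Adaptation idea follows. It swaps a small low-frequency window of the amplitude spectrum and leaves the synthetic phase untouched. The function name, the `beta` window parameter, and the random arrays standing in for images are assumptions for illustration; this is not a reference implementation of the published method or of any product SDK.

```python
import numpy as np


def fourier_domain_adaptation(synthetic, real, beta=0.01):
    """Replace the low-frequency amplitude of `synthetic` with that of `real`.

    Both inputs are float arrays of identical shape (H, W, C).
    `beta` sets the half-size of the swapped window relative to min(H, W).
    """
    fft_syn = np.fft.fft2(synthetic, axes=(0, 1))
    fft_real = np.fft.fft2(real, axes=(0, 1))

    amp_syn, phase_syn = np.abs(fft_syn), np.angle(fft_syn)
    amp_real = np.abs(fft_real)

    # Move the zero-frequency component to the center of the spectrum.
    amp_syn = np.fft.fftshift(amp_syn, axes=(0, 1))
    amp_real = np.fft.fftshift(amp_real, axes=(0, 1))

    h, w = synthetic.shape[:2]
    b = int(np.floor(min(h, w) * beta))
    ch, cw = h // 2, w // 2
    # Swap a symmetric window around the zero frequency.
    amp_syn[ch - b:ch + b + 1, cw - b:cw + b + 1] = amp_real[ch - b:ch + b + 1, cw - b:cw + b + 1]

    amp_syn = np.fft.ifftshift(amp_syn, axes=(0, 1))
    adapted = np.fft.ifft2(amp_syn * np.exp(1j * phase_syn), axes=(0, 1))
    return np.real(adapted)


# Random arrays stand in for a synthetic and a real camera image.
rng = np.random.default_rng(0)
synthetic_image = rng.random((32, 32, 3))
real_image = rng.random((32, 32, 3)) * 0.5  # darker overall tone
adapted_image = fourier_domain_adaptation(synthetic_image, real_image, beta=0.1)
print(adapted_image.mean(axis=(0, 1)), real_image.mean(axis=(0, 1)))
```

Because the zero-frequency amplitude is part of the swapped window, the adapted image takes on the per-channel mean brightness of the real image while its structure still comes from the synthetic phase. Output values can fall slightly outside the original range, so clipping before saving as an image is usually needed.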

Applied Intuition’s engineering team helps ADAS and AD development teams determine a suitable domain adaptation technique for their specific perception models.

Applied Intuition’s Approach to Using Synthetic Data

Instead of requiring ADAS and AD development teams to hand-craft each synthetic simulation scenario, Applied Intuition’s Synthetic Datasets product uses a high-level language to define the distribution of a synthetic dataset. This definition is automatically compiled into multiple individual scenarios for simulation. These synthetic datasets include:

- Rich labels: Applied Intuition supports generic computer vision labels like 2D and 3D bounding boxes, ADAS and AD-specific labels like lane marking and parking space annotations, as well as dense labels that are especially expensive for human labelers to implement (e.g., depth, optical flow, and semantic segmentation).

- Scalability: Dataset generation supports deployment on popular cloud frameworks, allowing teams to horizontally scale by running multiple simulations in parallel.

- Ease of use: Applied Intuition stores the generated dataset in a nuScenes-like data format. This allows teams to plug the dataset into a data loader of any popular deep learning framework with ease. Applied Intuition also delivers an extension to the nuScenes software development kit (SDK) for teams to easily visualize and modify the generated dataset.

- Integration support: The lifecycle of synthetic dataset creation does not stop when data and labels are generated. Synthetic Datasets also help teams integrate the generated dataset with an existing ML model and verify whether model performance has improved. Synthetic Datasets support common domain adaptation techniques in Applied Intuition’s SDK. Our team works directly with customers to identify why synthetic data does not improve model performance out of the box and helps integrate it with the specific model that customers wish to improve.

Effective use of synthetic data at scale offers a compelling solution to the data challenges encountered in ADAS and AD development. By overcoming the limitations of real-world data through enriched diversity, cost-effective labeling, and scalability, synthetic data not only addresses the immediate needs of ML model performance but also paves the way for innovative advancements in artificial intelligence (AI) training methodologies.

Applied Intuition’s approach highlights the importance of integrating synthetic and real data in a thoughtful manner, emphasizing the need for domain adaptation and careful consideration of potential domain gaps to ensure the seamless deployment of perception models in real-world scenarios. As we continue to explore the frontiers of synthetic data, it becomes clear that its potential is not just in augmenting existing datasets but in fundamentally transforming the way ADAS and AD teams approach ML model development, training, and refinement.

Read more about this topic

- Applied Intuition: “Why 3D Worlds Matter for ADAS and AD Development”

- Applied Intuition: “Case Study: Improving Object Detection Performance by Leveraging Synthetic Data”

- Applied Intuition via CVPR: “Improving Rare Classes on nuScenes LiDAR Segmentation Through Targeted Domain Adaptation”
