Open Standards and Their Critical Role in AV/ADAS Development

Autonomous vehicle (AV) testing language is fragmented, which makes it difficult for the automotive industry to collaborate and accelerate development. Open standards expand supply options for OEMs and AV programs while addressing safety concerns. Without them, automakers are either locked into a single vendor or struggle to extend the functionality of their tools, because scenario description languages and data annotation formats differ across vendors. It is also highly inefficient for each development team to define on its own how every software component should be assessed against safety expectations before it becomes a production-ready product. Since ADAS/AV development already demands significant time and resources, it makes sense to have common standards that developers can use to evaluate their safety-critical systems.

Open standards also enable faster integration through guaranteed interoperability between systems, and they allow AV programs to reuse, share, and collaborate across teams within an organization and across organizations such as OEMs, suppliers, and regulatory authorities (Table 1).

The automotive industry appears receptive to open standardization. In recent years, a substantial portion of the simulation standards, such as the OpenX standards including OSI, has been transferred from private companies to the Association for Standardization of Automation and Measuring Systems (ASAM). This signals the industry’s support for an independent, non-profit organization to drive the definition of these standards going forward.

Applied Intuition is a supporter of open standards for simulation tests and works closely with ASAM standards. In this blog, the Applied Intuition team summarizes the current landscape of open standards for simulation, their limitations, and their future scope. We also share our approach to tool development with respect to these open standards.

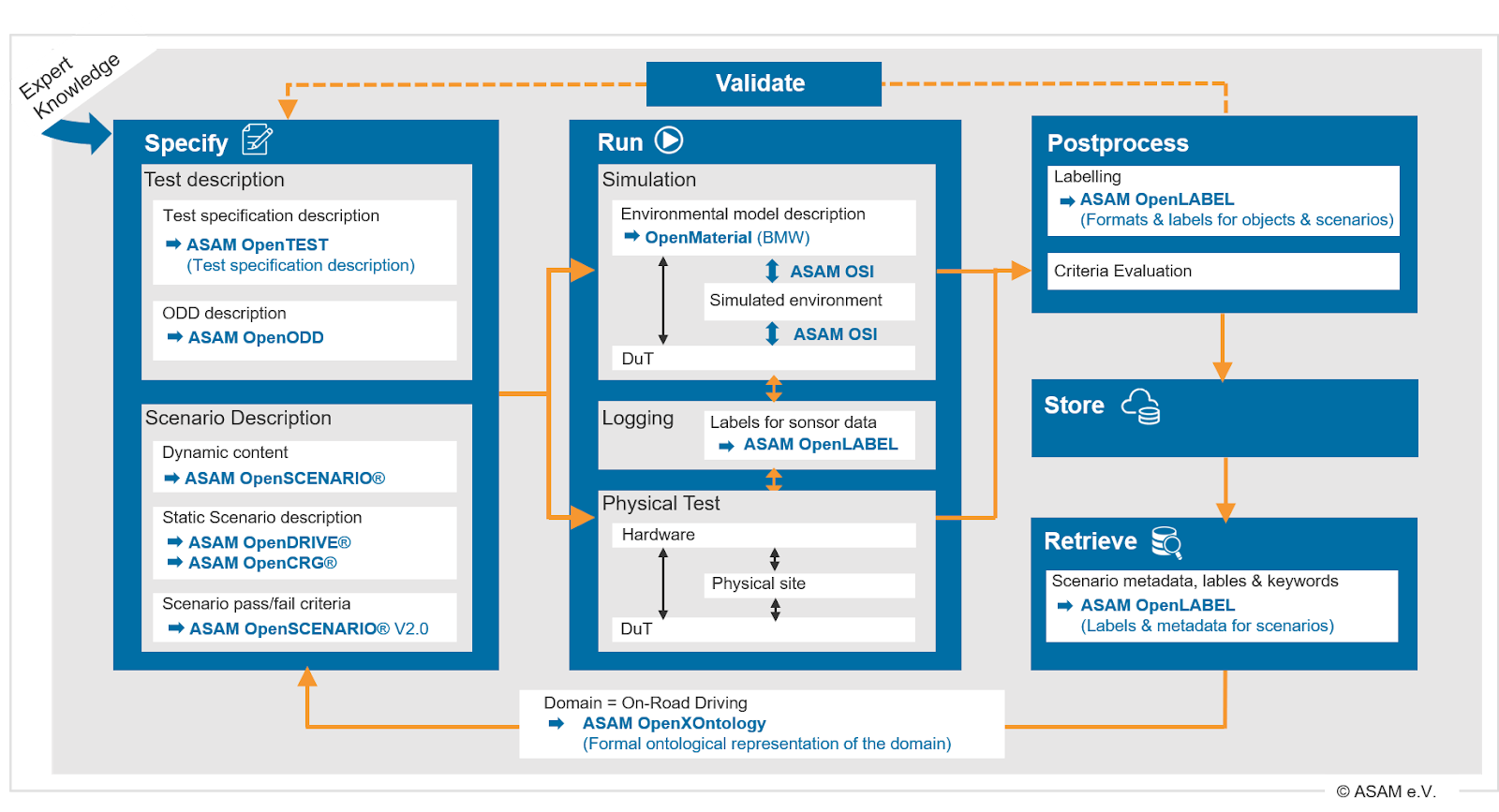

ASAM OpenX standards support collaborative, scenario-based testing workflows (Figure 1). The vision is to enable end-to-end validation workflows, covering scenario specification, test description, simulation, and labeling of object data from simulation tests, so that the resulting data can be exchanged between tools. These standards are further supported by the OpenX Ontology project, which aims to create an overall domain model so that all standards speak the same language.

Table 2 gives a high-level summary of the OpenX projects commonly used in simulation tests.

The current set of OpenX standards was designed to meet the needs of lower-autonomy use cases, and gaps remain before these standards can be useful for more complex systems.

For example, the current OpenDRIVE standard has some inconsistencies and undefined conversion heuristics that make it difficult to use. Its lane definition schema makes it challenging to determine what the road reference line should be for real-world roads when converting HD map formats to OpenDRIVE. Real-world roads, and particularly complex junctions, can be difficult to constrain to the limited set of geometries available in the standard (Figure 2). In such cases, extensive interpolation and optimization are required to break HD map lanes into chunks that can be closely approximated by the geometries OpenDRIVE provides. Because interpolation will not exactly reproduce every point of the original HD map, the OpenDRIVE lane representation loses accuracy, which lowers the fidelity of the map in simulation. Similar issues arise for road elevation and crossfall, which are also represented as parameterized functions relative to the reference line.
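To make the accuracy loss concrete, the sketch below approximates a sampled elevation profile with cubic records of the form OpenDRIVE uses for elevation, elev(ds) = a + b*ds + c*ds^2 + d*ds^3, where ds is the distance from the start of each record. It is a minimal illustration under stated assumptions, not a production converter: the (s, z) sample format, the least-squares fit, the greedy rule for starting a new record once the worst residual exceeds a tolerance, and all function names are hypothetical.

```python
"""Sketch: approximating a sampled HD-map elevation profile with cubic
records of the OpenDRIVE form elev(ds) = a + b*ds + c*ds**2 + d*ds**3.

Hypothetical throughout: the (s, z) samples, least-squares fitting, and the
greedy rule that starts a new record once the worst residual exceeds `tol`.
"""
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (s along the reference line [m], elevation z [m])


@dataclass
class ElevationRecord:
    s: float  # start of the record along the reference line
    a: float
    b: float
    c: float
    d: float

    def eval(self, s_query: float) -> float:
        ds = s_query - self.s  # local offset from the record start
        return self.a + self.b * ds + self.c * ds**2 + self.d * ds**3


def _solve(m: List[List[float]], v: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def fit_record(points: Sequence[Point]) -> ElevationRecord:
    """Least-squares cubic (lower degree if fewer than 4 points) over one chunk."""
    s0 = points[0][0]
    length = max(points[-1][0] - s0, 1e-9)
    n = min(4, len(points))  # number of coefficients to solve for
    ata = [[0.0] * n for _ in range(n)]
    aty = [0.0] * n
    for s, z in points:
        u = (s - s0) / length  # normalized to [0, 1] for better conditioning
        powers = [u**k for k in range(n)]
        for i in range(n):
            aty[i] += powers[i] * z
            for j in range(n):
                ata[i][j] += powers[i] * powers[j]
    coef_u = _solve(ata, aty) + [0.0] * (4 - n)
    # Undo the normalization: the ds**k coefficient is coef_u[k] / length**k.
    a, b, c, d = (coef_u[k] / length**k for k in range(4))
    return ElevationRecord(s0, a, b, c, d)


def max_residual(rec: ElevationRecord, points: Sequence[Point]) -> float:
    return max(abs(rec.eval(s) - z) for s, z in points)


def fit_profile(samples: Sequence[Point], tol: float) -> List[ElevationRecord]:
    """Greedily grow each chunk while a single cubic stays within `tol`."""
    records: List[ElevationRecord] = []
    start = 0
    while start < len(samples):
        end = min(start + 4, len(samples))  # four points fix a cubic exactly
        best = fit_record(samples[start:end])
        while end < len(samples):
            candidate = fit_record(samples[start:end + 1])
            if max_residual(candidate, samples[start:end + 1]) > tol:
                break
            best, end = candidate, end + 1
        records.append(best)
        # The next record starts at this chunk's last sample, so records abut.
        start = end - 1 if end < len(samples) else end
    return records


def profile_eval(records: Sequence[ElevationRecord], s_query: float) -> float:
    """Use the last record that starts at or before s_query."""
    active = records[0]
    for rec in records:
        if rec.s <= s_query:
            active = rec
    return active.eval(s_query)
```

A profile that is itself a single cubic collapses into one record, while a hypothetical grade with a short 0.3 m bump, sampled every meter and fitted with `tol=0.01`, needs several records. Tightening the tolerance raises fidelity but multiplies records, which is the same trade-off a converter faces when chunking lane geometry.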

OpenSCENARIO 1.0 currently provides a low-level specification format for simulation tools. In its current state, the standard is difficult to apply to complex autonomy use cases because it lacks abstract definitions, under-specifies maneuvers and perception, and has no definitions for scenario success criteria. Today’s autonomous driving testing requirements call for a higher level of scene description and representation, with parameter spaces that enable the functional-to-concrete scenario workflow proposed by the Pegasus project. For maneuvers, OpenSCENARIO 1.0 is underspecified in areas such as vehicle dynamics, which makes it difficult to ensure similar state trajectories across simulators that may use different algorithms and model fidelities. Similarly, OpenSCENARIO’s perception specification is insufficient: existing fields of the VisibilityAction class, such as graphics, traffic, and sensors, come with little explanation of their intended use. Specifications for perception models are necessary to ensure that actors behave consistently as environments and scenes change. Finally, current scenarios lack clear success criteria, which should be defined in the context of autonomous system assessment. Because a failed scenario test should prevent the offending software from being deployed to production vehicles, the value of the standard could be quite limited without a language for encoding scenario success or failure.
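In the Pegasus terminology, a functional scenario is described in prose, a logical scenario defines parameter ranges, and a concrete scenario fixes one value for every parameter. The sketch below shows that logical-to-concrete expansion together with an explicitly encoded pass/fail rule of the kind the paragraph argues is missing. The parameter names, the grid sampling, the trace format, and the 2-second time-to-collision threshold are all hypothetical choices for illustration, not part of OpenSCENARIO or the Pegasus method.

```python
"""Sketch: expanding a logical scenario into concrete scenarios and
encoding an explicit success criterion.

All names, the grid sampling, and the TTC threshold are hypothetical.
"""
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class ParameterRange:
    name: str
    low: float
    high: float
    steps: int  # number of evenly spaced values, including both ends

    def values(self) -> List[float]:
        if self.steps == 1:
            return [self.low]
        step = (self.high - self.low) / (self.steps - 1)
        return [self.low + i * step for i in range(self.steps)]


def concretize(logical: Sequence[ParameterRange]) -> List[Dict[str, float]]:
    """Cartesian product of all parameter values: one dict per concrete scenario."""
    names = [p.name for p in logical]
    return [dict(zip(names, combo)) for combo in product(*(p.values() for p in logical))]


@dataclass
class Sample:
    t: float              # simulation time [s]
    gap: float            # bumper-to-bumper distance to the lead vehicle [m]
    closing_speed: float  # ego speed minus lead speed [m/s]; > 0 means closing in


def min_time_to_collision(trace: Sequence[Sample]) -> float:
    """Smallest gap / closing_speed over samples where the ego is closing in."""
    ttcs = [s.gap / s.closing_speed for s in trace if s.closing_speed > 0]
    return min(ttcs, default=float("inf"))


def passes(trace: Sequence[Sample], min_ttc_s: float = 2.0) -> bool:
    """Success criterion: never touch the lead vehicle and keep TTC above a threshold."""
    no_collision = all(s.gap > 0 for s in trace)
    return no_collision and min_time_to_collision(trace) >= min_ttc_s
```

For example, a hypothetical logical cut-in scenario with `ParameterRange("ego_speed_mps", 20.0, 30.0, 3)` and `ParameterRange("cut_in_gap_m", 10.0, 40.0, 4)` expands to 3 × 4 = 12 concrete scenarios, and each simulated trace is then judged by `passes`. A real program would more likely sample the space stochastically or adaptively than enumerate a full grid.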

Furthermore, there is no common object or scenario labeling standard in the industry today, even though categorized and described training and test datasets are fundamental building blocks of perception systems. This lack of standardization causes several problems: different autonomous driving systems interpret their surroundings differently, data cannot be shared across organizations, and annotation quality suffers. OpenLABEL is an effort to standardize annotation methodology, structure, and description language.
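As a rough illustration of what a shared annotation format buys, the sketch below writes 2D bounding-box labels into a JSON layout loosely modeled on OpenLABEL (a root `openlabel` key with `metadata`, `objects`, and `frames`) and reads them back the way an independent consumer would. The helper functions are hypothetical, and the field names and box convention are assumptions that should be checked against the official OpenLABEL JSON schema before use.

```python
"""Sketch: producing and consuming labels in an OpenLABEL-like JSON layout.

Field names are modeled on OpenLABEL but should be verified against the
official schema; build_annotation and boxes_by_type are hypothetical helpers.
"""
import json
from typing import Dict, List, Tuple

# Assumed box convention: (x_center, y_center, width, height) in pixels.
BBox = Tuple[float, float, float, float]


def build_annotation(objects: Dict[str, Tuple[str, str]],
                     frames: Dict[int, Dict[str, BBox]]) -> dict:
    """objects: uid -> (name, type); frames: frame index -> uid -> 2D box."""
    doc = {
        "openlabel": {
            "metadata": {"schema_version": "1.0.0"},
            "objects": {uid: {"name": name, "type": typ}
                        for uid, (name, typ) in objects.items()},
            "frames": {},
        }
    }
    for idx, boxes in frames.items():
        # JSON object keys must be strings, so frame indices are stored as text.
        doc["openlabel"]["frames"][str(idx)] = {
            "objects": {
                uid: {"object_data": {"bbox": [{"name": "shape", "val": list(box)}]}}
                for uid, box in boxes.items()
            }
        }
    return doc


def boxes_by_type(doc: dict, wanted_type: str) -> List[Tuple[int, str, List[float]]]:
    """Consumer side: needs only the shared layout, not the producing tool."""
    root = doc["openlabel"]
    out = []
    for frame_id, frame in root["frames"].items():
        for uid, obj in frame["objects"].items():
            if root["objects"][uid]["type"] == wanted_type:
                for bbox in obj["object_data"].get("bbox", []):
                    out.append((int(frame_id), uid, bbox["val"]))
    return sorted(out)
```

Serializing the result with `json.dumps` and loading it elsewhere with `json.loads` yields the same structure, so a second tool can query, for instance, every `car` box per frame without any vendor-specific conversion step.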

Efforts are in progress to improve these simulation test specification standards. At a macro level, project teams are working to revise features required for higher levels of autonomy and are exploring new standards and concepts to further democratize simulation-based testing tools (Table 3).

Applied Intuition’s simulation tools support many of the existing standards, including OpenDRIVE, OpenSCENARIO, OSI, and FMI. As noted earlier, the current scope of these standards is focused on specific use cases and does not cover all the test scenarios required to make safety cases across different ODDs. Applied Intuition has therefore developed support beyond the current standards to cover broad use cases while the standards are still being developed. Applied Intuition is closely following the evolution of these standards, and our support will continue to broaden as they become more defined. Additionally, Applied Intuition’s scenario API wraps around functional scenario definitions, including OpenSCENARIO 2.0 and beyond. To learn more about Applied Intuition’s tools, including the scenario API, talk to Applied Intuition’s engineering team.